Forward Future Episode 23

LLaMA 3, Evidence of Aliens, iPhone 15 Launch, Tesla Dominates AI, AI-Flavored Coke

This week was absolutely insane. So many incredible things happened in the world of AI and technology. Apple launched a bunch of AI features without saying AI once, Coke introduced a new flavor created by AI, Mexico may have evidence of aliens, prisoners in Finland are training AI models, Meta is already developing their next AI model with the target of being better than GPT-4, Tesla is dominating the self-driving race, and so much more!

AI-Flavored Coke

Our first story is about Coca-Cola. With the help of artificial intelligence, the beverage giant has created a new flavor of Coke. Count that as a sentence I never thought I’d say. This new flavor, which mostly tastes like Coke with a little extra something, is supposed to taste like the future. Coke is an old product, and they must keep inventing new ways to appeal to the younger generations, many of whom view sugary soda beverages as a detriment to their health. So, tapping AI to help create a new flavor is their attempt to stay relevant. According to a CNN article: “The company relied on regular old human insights by discovering what flavors people associate with the future. Then it used AI to help figure out flavor pairings and profiles…Coca-Cola used AI-generated images to create a mood board for inspiration.” It does seem like AI is invading every aspect of our lives at this point.

Apple Announcements

Next, let’s talk about Apple’s big launches this week at their event called “Wonderlust”. Apple is in an interesting position, having hardware in millions of people’s pockets that potentially can power AI models. They push every chip upgrade to include more power for AI models, even in the Apple Watches. Yet, they have never mentioned artificial intelligence, the opposite strategy of their competitors at Microsoft and Google. I’ve already reported that they are working on their own LLM, Ajax. And AI-powered features are everywhere, including noise cancellation, blurring portraits, and predictive text. They even included a new “action” button, the first real button change on the phone in years, which will be perfect for quick access to an AI assistant and currently can trigger Siri actions.

I predict they will launch an incredibly capable version of Siri, powered by Ajax, that runs almost entirely on-device. Apple silicon is secretly already great at running AI models, and as more support for Apple silicon for AI models is released, it will only get better. But they probably will call it Siri and won’t mention AI. Imagine a version of Siri that runs locally on-device, with no need for the internet, and has Open Interpreter-like capabilities that can execute multi-step commands. This is what Siri should be, instead of just a way to set timers and remind me of things.

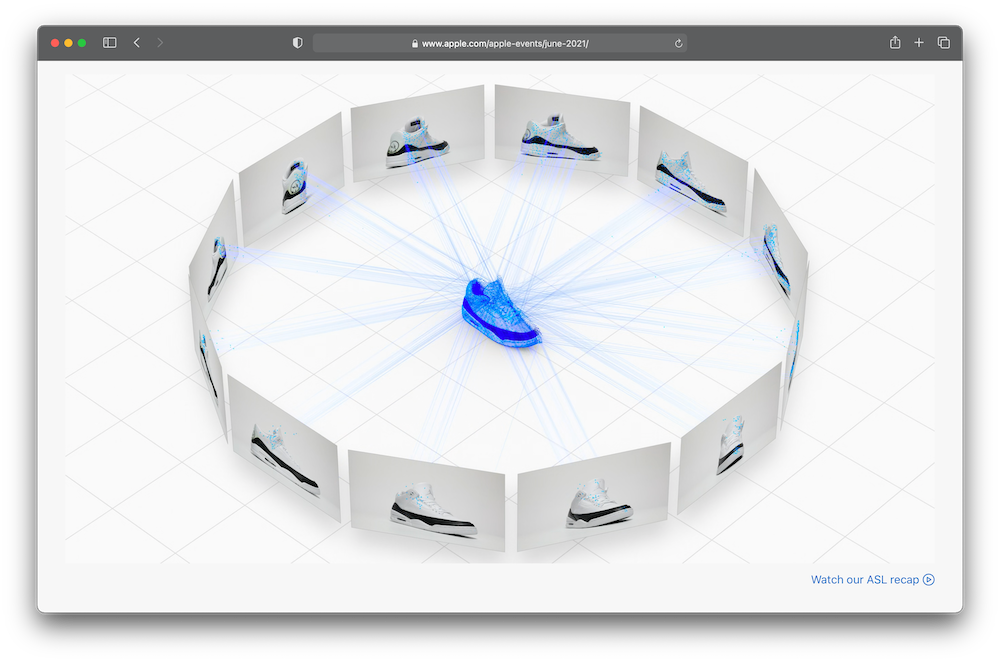

Apple Vision

Apple also is going all in on spatial video. In preparation for the Apple Vision launch, the new iPhone 15 Pro will include cameras that can capture spatial photos and video. This means you’re taking a 3D video that Apple’s upcoming VR device can view. Apple also launched an insane feature called object capture that allows a camera to render 3D objects just by photographing them. This, again, is in preparation for putting things in the 3D world within the Apple Vision headset. Check out this example. Pretty incredible. I know the price point for Apple Vision is ridiculous, but I’m still really excited about it.

Prisoners Training AI

Next, prisoners in Finland are working to train AI models. An article by Wired.com describes a woman sitting in front of an HP laptop, helping to train an AI model by reading a paragraph of text and answering questions like “is the previous paragraph referring to a real estate decision, rather than an application?” According to the article, “During three-hour shifts, for which she’s paid €1.54 ($1.67) an hour, the laptop is programmed to show Marmalade short chunks of text about real estate and then ask her yes or no questions about what she’s just read.” The woman isn’t completely aware of why she’s doing this work, but it turns out she’s helping train a large language model built by a Finnish startup called Metroc, which is creating a search engine to help construction companies find newly approved building projects. But why did this startup turn to prison workers? By hiring prisoners, they could get cheap, Finnish-speaking labor while offering the benefit of preparing prisoners for a post-release life in the digital world. The work is optional, and the alternatives are physical labor jobs, so in my mind, why not offer this? But something does feel a little strange about it. What do you think?

Aliens?

Let’s talk about aliens. Yes, aliens. In news that went utterly viral this week, the Mexican Congress held a public hearing where presenters displayed two potential alien corpses found in Cusco, Peru. According to the Independent’s article: “The event was spearheaded by journalist Jaime Maussan, who claimed, under oath, that the mummified specimens are not part of “our terrestrial evolution,” with almost a third of their DNA remaining “unknown.”” Additionally, “The claims by the self-claimed ‘ufologist’ have not been proven, and Mr Maussan has previously been associated with claims of discoveries that have later been debunked.” Although this is likely another fake, the fact that the Mexican government platformed it is exciting. Now, we get to bear witness to the most fantastic race of all. Who’s going to enslave humans first, aliens or AI?

Stable Audio

There were a bunch of new AI launches this week, so I’ll go through them quickly. First, Stability AI continues to contribute to the open-source community with the launch of Stable Audio, their version of text-to-audio. With Stable Audio, you can generate music, solo instruments, sound effects, and more. Everything is open-source and freely available to use, including the demo, which is available on their website.

Slack AI

Next, Slack is releasing AI functionality. As a Slack user for over a decade, this might be one of the most valuable AI launches I’ve seen. With Slack’s AI, you can summarize threads, recap channel highlights, and search for answers within your messages. Slack is an incredible tool but suffers from severe signal-to-noise issues. A vast amount of business-critical information is lost in Slack, so getting answers from the entire history of your conversations will be incredibly valuable. I can’t wait to try this out.

Claude Pro

Next, in direct competition with ChatGPT Plus, Anthropic has launched a paid version of its Claude AI service. Anthropic was founded by ex-OpenAI employees, and Claude’s performance has been comparable to ChatGPT’s. For $20/mo, customers will get 5x more usage than the free tier, the ability to send many more messages, priority access during high-traffic times, and early access to new features. The main difference between Claude Pro and ChatGPT Plus is that Claude doesn’t offer different model tiers the way ChatGPT does with GPT-3.5 and GPT-4. To be honest, I haven’t tested Claude much. Should I? Let me know in the comments.

Character AI

Next, Character.AI is making massive gains on ChatGPT in mobile app usage in the US, according to an article by robots.net. I haven’t had much need for creating characters, so I haven’t used this product much. Plenty of other people in the US are using it, though, with the app reaching 4.2 million monthly active users. Character.AI’s demographic also tends to skew younger, with 60% of its audience between the ages of 18 and 24. The Character.AI app only launched in May this year, so its growth is impressive. I’m still trying to figure out how to integrate it into my life, though. What are some of the use cases you’re using role-playing AI for?

Tesla AI

Next, let’s talk about Tesla AI. Many people have said other car makers are not far behind Tesla regarding autonomous driving capabilities. Still, excerpts from Walter Isaacson’s new book profiling Elon Musk tell a different story. Historically, self-driving cars, including Tesla’s, have been built using a mixture of neural networks and hundreds of thousands of lines of code. But not long ago, Musk and the team decided to go full AI, which significantly reduces complexity and can likely handle edge cases much more effectively. Their system is based solely on neural networks learning from the video recorded by every Tesla on the road. And that’s where Tesla has a significant advantage. Musk decided many years ago to put cameras in every Tesla and record all that video. They currently have millions of miles’ worth of driving data and are using that to train their pure neural network models. And because of this, they are likely way ahead of their competitors, most of which still don’t install cameras in every car. All of Tesla’s newest versions of full self-driving are based on pure AI. I tested it myself a few months ago, and it was impressive but still failed at times. And if I’m being honest, it took more mental effort to watch the car in full self-driving mode than it did for me to drive. But with more training and more exposure to it, I believe that will change.

LLaMA 3

Next, not one to rest on its laurels, Meta is already gearing up for its next version of LLaMA, with a specific target of matching or beating GPT-4 in quality. According to the article in The Verge, “Meta has been snapping up AI training chips and building out data centers to create a more powerful new chatbot it hopes will be as sophisticated as OpenAI’s GPT-4” and “The company reportedly plans to begin training the new large language model early in 2024, with CEO Mark Zuckerberg pushing for it to once again be free for companies to create AI tools with.” Also, “The Journal writes that Meta has been buying more Nvidia H100 AI-training chips and is beefing up its infrastructure so that, this time around, it won’t need to rely on Microsoft’s Azure cloud platform to train the new chatbot.” You already know I’ll create test and tutorial videos as soon as the new model is released. Once again, I’m highly impressed by Meta’s continued contributions to the open-source community.

AI Stock Trades

For our next story, AI is now entering our financial markets. This week, Nasdaq received SEC approval for AI-based trade orders. According to an article from Cointelegraph, “Called the dynamic midpoint extended life order (M-ELO), the new system expands on the M-ELO automated order type by making it “dynamic,” meaning it will use artificial intelligence to update and, essentially, recalibrate itself in real-time.” In basic terms, order types are software instructions that execute trades according to market prices. Now, these orders will be recalibrated in real time using reinforcement learning. According to a post by Nasdaq, “Calculated on a symbol-by-symbol basis, this new functionality analyzes 140+ data points every 30 seconds to detect market conditions and optimize the holding period prior to which a trade is eligible to execute.” What all of this technical talk means is that more trades will occur without a significant price increase.
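To make the idea of a holding period a little more concrete, here is a toy sketch in Python. It is not Nasdaq’s implementation: the `MidpointOrder` class, the `is_eligible` and `midpoint` helpers, the `XYZ` symbol, and the 10 ms value are all hypothetical names and numbers chosen for illustration. It only shows the general shape of an order that must wait before it can trade at the midpoint between the best bid and best ask, and how a “dynamic” version could simply swap in a new waiting time.

```python
from dataclasses import dataclass


@dataclass
class MidpointOrder:
    # Hypothetical order record, for illustration only.
    symbol: str
    entered_at_ms: int      # when the order arrived, in milliseconds
    holding_period_ms: int  # how long it must wait before it may execute


def midpoint(best_bid: float, best_ask: float) -> float:
    # Price halfway between the best bid and the best ask.
    return (best_bid + best_ask) / 2


def is_eligible(order: MidpointOrder, now_ms: int) -> bool:
    # The order may execute only once its holding period has elapsed.
    return now_ms - order.entered_at_ms >= order.holding_period_ms


order = MidpointOrder("XYZ", entered_at_ms=0, holding_period_ms=10)
print(is_eligible(order, now_ms=5))   # still waiting
print(is_eligible(order, now_ms=10))  # holding period has elapsed

# A "dynamic" variant would recalibrate the holding period over time;
# here that is just an assignment with a made-up value.
order.holding_period_ms = 4
print(is_eligible(order, now_ms=5))
```

In the real system, the new waiting time would come from the model Nasdaq describes, recalculated per symbol from market data, rather than from a hard-coded number.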

Copilot Copyright Guarantee

This week, there was a lot of movement in the safety, security, and legal space related to AI. First, Microsoft committed to assuming legal responsibility for code generated from their GitHub Copilot product. GitHub Copilot allows developers to build code much more quickly because it uses AI to generate portions of code rather than hand-writing it or, more realistically, copying it from Stack Overflow. However, many organizations were concerned that they would accidentally use copyrighted code that was output from Copilot. Now, Microsoft is saying, don’t worry, we got it. And any legal responsibility will now fall on them. This is a smart move by the software giant, especially as it continues to be the leader in AI amongst the biggest tech companies.

AI Essays

But, of course, AI is also being used for nefarious purposes. This is the first full academic year that students have access to ChatGPT, and boy, are things different already. Now, companies that use AI to help students cheat, such as by writing essays for them, are advertising all over Meta and TikTok. According to a Fast Company article, essay mills, companies that pump out school essays for a fee, “…are soliciting clients on TikTok and Meta platforms—despite the fact that the practice is illegal in a number of countries, including England, Wales, Australia, and New Zealand.” Since hallucinations are still a big issue for LLMs, essay mills claim to combine the power of AI with human intervention to reduce or eliminate hallucinations from their essays. This seems analogous to the introduction of the calculator. When I was in school, we learned the basics of math and were soon allowed to use a calculator as we ventured into more complex mathematical topics. Maybe essay writing will not be that valuable of a skill in the future, so why put so much emphasis on it in school? Learn the basics, and then learn how to use AI to help write.

White House AI Agreement

Now, let’s talk about AI safety. This week, we have multiple stories from the biggest tech companies, starting with Adobe, IBM, and Nvidia, promising the White House they would develop safe and trustworthy AI. According to an article in The Verge, other companies that committed to the White House include Cohere, Palantir, Salesforce, Scale AI, and Stability AI. These agreements are voluntary, so there’s no recourse if companies fail to meet their commitments.

Google AI Fund

Next, Google has committed $20 million to its responsible AI fund. According to an Axios article, Google says the project will “support researchers, organize convenings and foster debate on public policy solutions to encourage the responsible development of AI.” $20 million is a lot of money, but not for a company of Google’s size. I appreciate what they’re doing, but it will take a lot more to ensure safety from AI.

AI Political Ads

Google also took the step of requiring disclosure when political ads contain AI-generated content. With the upcoming elections, AI-generated content, especially deepfakes, will be an enormous problem for content platforms like Google and Meta. Both companies are significantly ramping up efforts to identify and prevent AI from being used for harm, fraud, and other nefarious purposes.

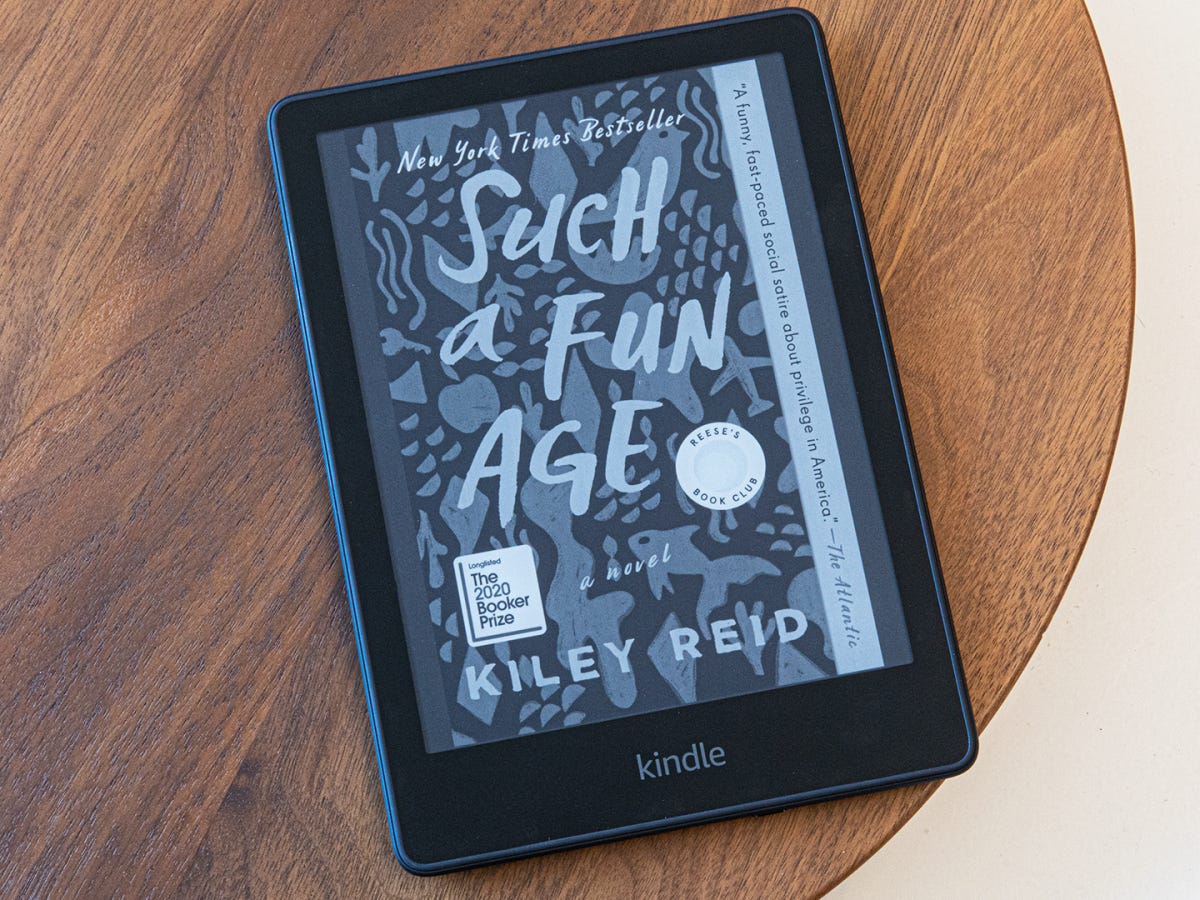

AI Authors Must Disclose

Continuing the theme of AI disclosure, Amazon enacted a new rule requiring authors to disclose the use of AI when books are published on Kindle. One of the earliest examples of commercialization of AI content was a children’s book written entirely by AI. Many authors were up in arms about this revelation, and it was eventually ruled that AI-generated work cannot be copyrighted. Now, AI-generated work will need to be disclosed. I’m a big fan of requiring disclosure whenever AI is being used, including writing, TV, movies, and even talking with a chatbot.

AI Water Usage

When most people think about the resources required in training AI systems, they think about GPUs and electricity, but almost no one thinks about water. But, according to an article from AP News, water is an important and often overlooked ingredient in the creation of AI. “[One thing] Microsoft-backed OpenAI needed for its technology was plenty of water, pulled from the watershed of the Raccoon and Des Moines rivers in central Iowa to cool a powerful supercomputer as it helped teach its AI systems how to mimic human writing.” Water is used to help cool enormous server farms and apparently, “In a paper due to be published later this year, it’s estimated that ChatGPT gulps up 500 milliliters of water (close to what’s in a 16-ounce water bottle) every time you ask it a series of between 5 to 50 prompts or questions.” Microsoft, Google, and other tech giants are reporting significant water usage increases. This is another fascinating side effect of the AI race. More efficient and sustainable technology will be needed as the desire for more performant AI models continues.
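To put that estimate in per-prompt terms, here is a quick back-of-the-envelope calculation in Python. It uses only the two figures quoted above (500 milliliters per series of 5 to 50 prompts); the variable and function names are my own, and the result is a rough range, not a measurement.

```python
# Figures from the AP quote: about 500 mL of water per series of 5 to 50 prompts.
WATER_PER_SERIES_ML = 500
MIN_PROMPTS, MAX_PROMPTS = 5, 50


def water_per_prompt_ml(series_ml: float, prompts: int) -> float:
    # Spread the water for one series evenly across its prompts.
    return series_ml / prompts


high = water_per_prompt_ml(WATER_PER_SERIES_ML, MIN_PROMPTS)  # short series
low = water_per_prompt_ml(WATER_PER_SERIES_ML, MAX_PROMPTS)   # long series

print(f"Roughly {low:.0f} to {high:.0f} mL of water per prompt")
# prints "Roughly 10 to 100 mL of water per prompt"
```

So a single question works out to somewhere between a couple of teaspoons and a little under half a cup of water, depending on which end of the range you assume.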

AI Building Design

Enough with the doom and gloom. We have two stories this week that’ll make you smile. First, a student from MIT used AI to help design a building using less concrete. Concrete is one of the most widely used construction materials and produces significant greenhouse gases, responsible for 8% of the world’s emissions. Jackson Jewett, a third-year Ph.D. student, is writing his dissertation on developing algorithms to design concrete structures that use less material but maintain the same strength.

AI Identifying Cancer

And next, on the health side, AI continues to help doctors identify the world’s deadliest cancers at a better rate than human doctors alone. The Optellum Virtual Nodule Clinic software is trained to identify cancer at a promising rate, using huge databases of CT scans as training data. With help from this software, we’ll be able to detect cancer earlier and require fewer follow-up CT scans, which are costly and time-intensive. I’ll link the article in the description below so you can read the details.

AI Video Of The Week

Now it’s time for the AI video of the week! X user Jeff Synthesized has created another compelling video, remaking a scene from Pulp Fiction using AI this week. Here’s a short clip from it. Pulp Fiction is one of my favorite movies, so this video made me happy. Congratulations to Jeff for being this week’s AI Video of the Week again.

RussianGPT

For our last story, a new large language model out of Russia has reportedly displayed higher potential than GPT-4. This comes from IT giant Yandex, a Russian company. It isn’t easy to know whether this is true since we cannot test it and I haven’t seen a way to play with the model. It doesn’t seem as though this model will be open-source, either. According to Menafn.com, “Dubbed YandexGPT, the Russian bot’s “basic model steadily surpasses” the US ChatGPT 3.5 version about creating answers in Russian.” We know the AI race is not going to be based on the tech giants in the US alone. AI supremacy is a global race with China and the US leading, and now apparently Russia has made a strong showing.

You wrote “ they displayed two potential alien corpses found in Cuscu, Mexico.” That is wrong at so many levels. First, is call Cusco and second is not in Mexico, it is in Peru. This is so disappointing because it shows that you could not bother in doing a little research to verify what you write. Apparently, the forward future is, if not ignorant, at the very least, lazy.